Recently I was working on integrating Oracle Sales Cloud (OSC) with Siebel UCM for Accounts. Oracle Sales Cloud can read a WSDL specification from a URL, but you still have to build the request message yourself by writing a global function in Groovy script (would be nice to shoot whoever designed this). Turns out, you can only add elements and attributes that already exist in the parsed WSDL. Nothing new can be added. © 2016 cleartext.blogspot.com

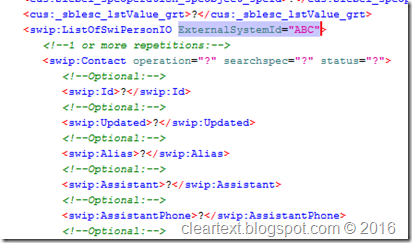

The problem is that integrations to Siebel UCM require a hidden attribute named ExternalSystemId to be populated in the incoming message. This attribute is not in the WSDL that Siebel UCM generates, yet it has to be sent in the SOAP request (would also be nice to shoot whoever designed this). Without it, UCM rejects the request with:

```
Error invoking service 'UCM Transaction Manager', method 'SOAPExecute' at step 'Transaction Manager'.(SBL-BPR-00162)
--
Failed to find ExternalSystemId in input message(SBL-IAI-00436)
```

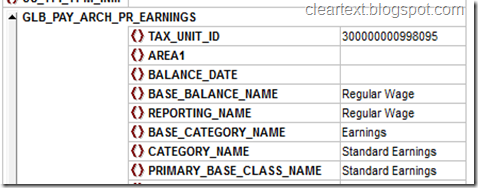

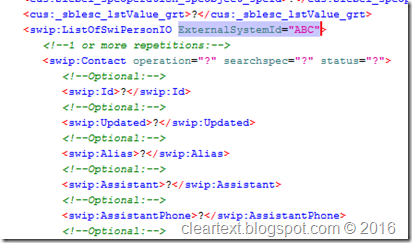

This is the request you get when you consume the UCM WSDL in SoapUI.

The actual message, however, has to carry the ExternalSystemId attribute (see the highlighted changes).
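Because the visible symptom is UCM rejecting the request, it can help to check a captured request (for example one copied from SoapUI or a log) for the attribute before sending it on. The following is a minimal Python sketch, assuming SOAP 1.1 and an unqualified ExternalSystemId attribute somewhere inside the SOAP Body; the function name, the element names in the sample and the value MY_SYSTEM are made up for illustration.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# SOAP 1.1 envelope namespace; a SOAP 1.2 request would use
# "http://www.w3.org/2003/05/soap-envelope" instead.
SOAP_ENV_NS = "http://schemas.xmlsoap.org/soap/envelope/"


def find_external_system_id(request_xml: str) -> Optional[str]:
    """Return the ExternalSystemId value carried by any element inside the
    SOAP Body, or None if no element carries it."""
    root = ET.fromstring(request_xml)
    body = root.find(f"{{{SOAP_ENV_NS}}}Body")
    if body is None:
        raise ValueError("no SOAP 1.1 Body element found")
    for element in body.iter():
        # Locally declared attributes are unqualified by default,
        # so the plain attribute name is enough here.
        value = element.get("ExternalSystemId")
        if value is not None:
            return value
    return None


# Hypothetical request: the element names and "MY_SYSTEM" are made up.
SAMPLE_REQUEST = """<soapenv:Envelope
    xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/"
    xmlns:ex="urn:example">
  <soapenv:Body>
    <ex:ListOfExampleAccount ExternalSystemId="MY_SYSTEM">
      <ex:Account><ex:Name>Acme</ex:Name></ex:Account>
    </ex:ListOfExampleAccount>
  </soapenv:Body>
</soapenv:Envelope>"""

if __name__ == "__main__":
    print(find_external_system_id(SAMPLE_REQUEST))  # prints MY_SYSTEM
```

A None result corresponds to the situation behind the "Failed to find ExternalSystemId" error above.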

If you add Groovy script to set this attribute, OSC simply ignores it and the attribute is never sent to UCM. The only viable workaround is to edit the WSDL AFTER it is generated, but BEFORE it is given to Sales Cloud!

1: Generate the WSDL from UCM.

2: Open it in an XML editor (use XMLSpy if you have it).

3: Find the definition of the top container element in the WSDL.

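4: Add this attribute declaration to that definition's complexType, typically right after its xsd:sequence (XML Schema requires attribute declarations to follow the content model):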

```xml
<xsd:attribute name="ExternalSystemId" type="xsd:string"/>
```

5: Now validate, save, and upload this WSDL to a public folder from which OSC can read it. OSC does not keep its own copy of the WSDL; it reads the definition on the fly.

6: Now add the Groovy script to populate this new attribute with the registered SystemId name.
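Steps 2 to 4 can also be scripted, which helps if the WSDL has to be regenerated from UCM every now and then. Below is a minimal Python sketch, assuming the top container element is defined by a named xsd:complexType with a plain sequence content model; the function names, the type name ListOfExampleAccount and the sample WSDL are made up, so look up the real type name in your own UCM WSDL and still validate the result as in step 5.

```python
from typing import Union
from xml.dom import minidom

XSD_NS = "http://www.w3.org/2001/XMLSchema"
ATTR_NAME = "ExternalSystemId"


def _child_elements(node) -> list:
    """Direct element children only (skips whitespace text and comments)."""
    return [c for c in node.childNodes if c.nodeType == c.ELEMENT_NODE]


def add_external_system_id(wsdl: Union[str, bytes], type_name: str) -> str:
    """Return the WSDL text with <xsd:attribute name="ExternalSystemId"
    type="xsd:string"/> added to the xsd:complexType named type_name."""
    doc = minidom.parseString(wsdl)
    for ctype in doc.getElementsByTagNameNS(XSD_NS, "complexType"):
        if ctype.getAttribute("name") != type_name:
            continue
        children = _child_elements(ctype)
        # Safe to re-run: do nothing if the attribute is already declared.
        if any(c.namespaceURI == XSD_NS and c.localName == "attribute"
               and c.getAttribute("name") == ATTR_NAME for c in children):
            return doc.toxml()
        # Reuse whatever prefix this WSDL binds to the XSD namespace.
        prefix = f"{ctype.prefix}:" if ctype.prefix else ""
        attr = doc.createElementNS(XSD_NS, prefix + "attribute")
        attr.setAttribute("name", ATTR_NAME)
        attr.setAttribute("type", prefix + "string")
        # Attribute declarations go after the content model (sequence etc.)
        # but before any xsd:anyAttribute.
        any_attr = next((c for c in children if c.localName == "anyAttribute"), None)
        ctype.insertBefore(attr, any_attr)  # None means append at the end
        return doc.toxml()
    raise ValueError(f"xsd:complexType named {type_name!r} not found")


def patch_file(src_path: str, dst_path: str, type_name: str) -> None:
    """Read a WSDL file, add the attribute, and write the result as UTF-8."""
    with open(src_path, "rb") as src:  # bytes, so the declared encoding is honoured
        patched = add_external_system_id(src.read(), type_name)
    with open(dst_path, "w", encoding="utf-8") as dst:
        dst.write(patched)


# Tiny made-up WSDL; a real UCM WSDL is much larger and uses its own names.
SAMPLE_WSDL = """<wsdl:definitions xmlns:wsdl="http://schemas.xmlsoap.org/wsdl/"
    xmlns:xsd="http://www.w3.org/2001/XMLSchema">
  <wsdl:types>
    <xsd:schema targetNamespace="urn:example">
      <xsd:complexType name="ListOfExampleAccount">
        <xsd:sequence>
          <xsd:element name="Account" type="xsd:string" maxOccurs="unbounded"/>
        </xsd:sequence>
      </xsd:complexType>
    </xsd:schema>
  </wsdl:types>
</wsdl:definitions>"""

if __name__ == "__main__":
    print(add_external_system_id(SAMPLE_WSDL, "ListOfExampleAccount"))
```

For a real file, a call such as patch_file("ucm.wsdl", "ucm_osc.wsdl", "<your container type>") writes the edited copy that you then upload. minidom is used rather than ElementTree because ElementTree does not preserve the original namespace prefixes on output, which would break QName values such as type="xsd:string".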

Phew!!
