Aahh… multilingual. That word instantly increases the complexity of any project. When your enterprise application is multilingual, users can add and edit data in different languages, and that data can no longer be stored in ASCII/ANSI format. East Asian, Middle Eastern, and some European languages need more than one byte for a single character, so the data has to be stored in a Unicode encoding. While working on Taleo’s TCC scripts, I recently hit a roadblock with multilingual data, but the fantastic folks at Taleo had already solved the problem. © cleartext.blogspot.com 2016
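To see why ASCII runs out, here is a small Python sketch (Python is just my choice for illustration; nothing here is part of TCC). It checks whether a character fits in 7-bit ASCII and counts how many bytes it takes in UTF-8. The sample characters and helper names are illustrative only.

```python
# Illustrative helpers, not part of TCC.
SAMPLES = {
    "Latin (ASCII)": "A",
    "Latin (accented)": "\u00e9",  # é
    "Arabic": "\u0639",            # ع
    "Chinese": "\u4e2d",           # 中
}


def fits_in_ascii(ch: str) -> bool:
    """Return True if `ch` can be encoded in 7-bit ASCII."""
    try:
        ch.encode("ascii")
        return True
    except UnicodeEncodeError:
        return False


def utf8_width(ch: str) -> int:
    """Return the number of bytes `ch` occupies when encoded as UTF-8."""
    return len(ch.encode("utf-8"))


if __name__ == "__main__":
    for name, ch in SAMPLES.items():
        print(f"{name:17} ascii={fits_in_ascii(ch)!s:5} utf8_bytes={utf8_width(ch)}")
```

Only the plain Latin "A" fits in ASCII; the accented and Arabic letters take two bytes in UTF-8, and the Chinese character takes three.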

TCC’s pipelines handle data in UTF-8, but many enterprise systems produce output in UTF-16. So which one is better? There is a common misconception that UTF-8 cannot store every language’s characters, and that UTF-16 is required. That’s not true. UTF-8 is a variable-width encoding (one to four bytes per character) that can represent every Unicode character, just like UTF-16. Here is a spectacular explanation of this miracle.
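A quick way to convince yourself that UTF-8 covers everything UTF-16 does is to round-trip every Unicode scalar value (U+0000 to U+10FFFF, minus the surrogate range U+D800 to U+DFFF, which UTF-16 reserves for its own use) through both encodings. The Python sketch below, with hypothetical helper names, also compares per-character byte widths, because neither encoding is smaller for all text.

```python
import sys
from typing import Tuple


def all_scalar_values() -> str:
    """Return a string containing every Unicode scalar value exactly once."""
    # sys.maxunicode is 0x10FFFF; U+D800..U+DFFF are surrogates, not characters.
    return "".join(
        chr(cp) for cp in range(sys.maxunicode + 1) if not 0xD800 <= cp <= 0xDFFF
    )


def round_trips(encoding: str) -> bool:
    """Return True if every scalar value survives encode -> decode unchanged."""
    text = all_scalar_values()
    return text.encode(encoding).decode(encoding) == text


def widths(ch: str) -> Tuple[int, int]:
    """Return (UTF-8 bytes, UTF-16 bytes) for `ch`, not counting a BOM."""
    return len(ch.encode("utf-8")), len(ch.encode("utf-16-le"))


if __name__ == "__main__":
    print("utf-8 round-trips:", round_trips("utf-8"))
    print("utf-16 round-trips:", round_trips("utf-16"))
    for ch in ("A", "\u4e2d", "\U0001F600"):  # Latin, CJK, emoji
        print(repr(ch), widths(ch))
```

Both encodings round-trip everything. For mostly Latin text UTF-8 is smaller, for mostly CJK text UTF-16 usually is, and characters outside the Basic Multilingual Plane (such as emoji) take four bytes in both.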

So if you get data in UTF-16 (or any other encoding), how do you load it via TCC? In the configuration file, TCC provides an option to set the encoding of the source file.

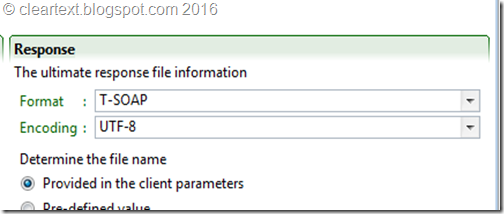

If you know the encoding of the source, enter it here. This works for standard Import files. There is also an option to set the response encoding.
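If you are not sure what encoding a source file uses, one rough first check is its byte-order mark (BOM). The Python sketch below, with hypothetical `sniff_bom` and `sniff_file` helpers, looks only at the first few bytes. It is a heuristic, not a detector: the BOM is optional (UTF-8 files usually have none), so `None` means "unknown", not "ASCII". The returned strings are Python codec names and may not match the labels in TCC’s encoding list.

```python
import codecs
from typing import Optional

# Longest BOMs first: the UTF-32 LE BOM (FF FE 00 00) begins with the
# UTF-16 LE BOM (FF FE), so it must be checked first. As a side effect, a
# UTF-16 LE file whose first character is U+0000 would be reported as UTF-32 LE.
_BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32-le"),
    (codecs.BOM_UTF32_BE, "utf-32-be"),
    (codecs.BOM_UTF8, "utf-8-sig"),
    (codecs.BOM_UTF16_LE, "utf-16-le"),
    (codecs.BOM_UTF16_BE, "utf-16-be"),
]


def sniff_bom(head: bytes) -> Optional[str]:
    """Guess the encoding from a leading BOM; return None if there is none."""
    for bom, name in _BOMS:
        if head.startswith(bom):
            return name
    return None


def sniff_file(path: str) -> Optional[str]:
    """Read the first four bytes of `path` and pass them to sniff_bom."""
    with open(path, "rb") as f:
        return sniff_bom(f.read(4))
```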

But some complex TCC configurations, such as NetChange, require the pipeline data to be in UTF-8. So how do you get around that?

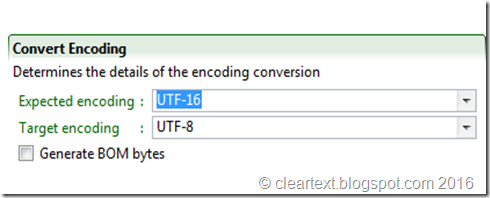

The encoding can be changed in the configuration file. Go to the Pre-Processing tab and add a new step.

There is an option to add a ‘Convert Encoding’ step.

Choose the source encoding, and set the target as UTF-8. This step has to be the first step in your NetChange configuration file.
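TCC’s Convert Encoding step is configured in the GUI, so there is nothing to code there. Still, it can help to see what such a step does to the bytes. Here is a rough Python equivalent: a sketch with a hypothetical `convert_to_utf8` function, assuming the source is UTF-16 with a BOM. It is not how TCC implements the step, though something like it could also pre-convert a file before TCC picks it up.

```python
import shutil


def convert_to_utf8(src_path: str, dst_path: str, src_encoding: str = "utf-16") -> None:
    """Re-encode a text file from `src_encoding` to UTF-8 without a BOM."""
    # newline="" stops Python from translating line endings in either direction,
    # so CRLF rows in the extract stay CRLF.
    with open(src_path, "r", encoding=src_encoding, newline="") as src, \
         open(dst_path, "w", encoding="utf-8", newline="") as dst:
        shutil.copyfileobj(src, dst)  # copies in chunks, so large files are fine
```

Decoding with Python’s `utf-16` codec consumes the BOM, and the `utf-8` codec writes none, so the output is plain UTF-8 with the same characters and line endings.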

That’s it!